The -printf action of the find command can give you what you need: find everything of -type f (regular files), and print the size %s, filename %f and full path %p of each, all separated by /. As a consequence, you can use the / as a separator between these 3 fields. The full paths will contain more slashes, but we know that only the first 2 slash separators, the ones we specify explicitly in the -printf format, are ours.
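As a rough illustration of that listing format, the sketch below builds a throwaway directory (the demo tree and file names are made up for the example), runs find with the format described above, and splits the first line back into its 3 fields with shell parameter expansion. It assumes GNU find, since -printf is not available in every find implementation, and filenames that contain no newlines.

```shell
#!/bin/sh
# Throwaway demo tree; directory and file names are hypothetical.
demo=$(mktemp -d)
mkdir -p "$demo/a" "$demo/b"
printf 'abc' > "$demo/a/song.mp3"
printf 'xyz' > "$demo/b/song.mp3"

# GNU find: %s = size in bytes, %f = filename, %p = full path.
listing=$(find "$demo" -type f -printf '%s/%f/%p\n' | sort)
printf '%s\n' "$listing"

# Only the first 2 slashes are separators; the rest belongs to the path.
first=$(printf '%s\n' "$listing" | head -n 1)
size=${first%%/*}    # text before the first slash
rest=${first#*/}     # drop the leading "size/"
name=${rest%%/*}     # text before the next slash
path=${rest#*/}      # everything else, slashes included
printf 'size=%s name=%s path=%s\n' "$size" "$name" "$path"

rm -rf "$demo"
```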

It is a convenience that the slash / is not a valid filename character: it is reserved for separating directory names and filenames. Now you want to use 2 key fields: size and filename, and you need the full path as well. Because you indicate you have a large file collection, I suggest sorting rather than holding every path in an associative array. You want to use the output of the first sort twice. I would use a temporary file for that; named pipes, FIFOs and such do not require less work for this simple job. The command mktemp will create a safely unique file in the /tmp/ directory and print its name for you. With the command substitution construct $(...) you can assign that name to a variable named tmp or whatever. Then sort a second time with the unique option, so that a compare of both results gives you the duplicates.
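One way to put those steps together is sketched below. The demo tree is hypothetical, and diff is used here as the compare step, which is only one possible choice. It again assumes GNU find and filenames without newlines. Note that this prints only the extra copies: one line fewer than the number of files sharing each size plus filename.

```shell
#!/bin/sh
# Throwaway demo tree; directory and file names are hypothetical.
demo=$(mktemp -d)
mkdir -p "$demo/a" "$demo/b" "$demo/c"
printf 'abc' > "$demo/a/song.mp3"
printf 'xyz' > "$demo/b/song.mp3"    # same size and name as a/song.mp3
printf 'abc' > "$demo/c/other.mp3"   # same size, different name

tmp=$(mktemp)   # creates the temporary file and prints its name

# First sort: numeric on size (field 1), then on filename (field 2).
find "$demo" -type f -printf '%s/%f/%p\n' | sort -t/ -k1,1n -k2,2 > "$tmp"

# Second sort with -u keeps one line per size+filename key. The lines that
# diff reports as present only in the first listing are the extra copies.
dups=$(sort -t/ -k1,1n -k2,2 -u "$tmp" | diff "$tmp" - | sed -n 's/^< //p')
printf '%s\n' "$dups"

rm -rf "$demo" "$tmp"
```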

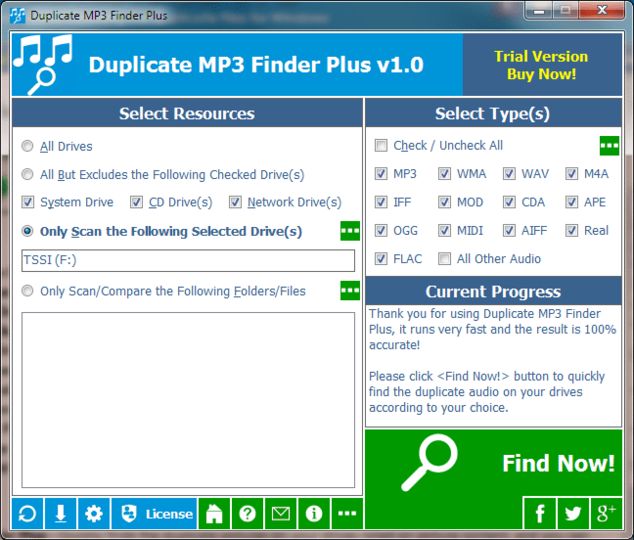

#Mp3 duplicate finder review plus#

You really do not need external utilities for this. You can go quite some distance by chaining a set of standard commands, which you can keep at hand by storing them in a shell script or function. To detect duplicates, you might store all file paths in an associative array, keyed on size plus filename. I don't know of a tool that filters on filesize, but if I were creating one from scratch, I think I'd cobble something together using find and awk. Let us know if that's what you want - I don't think it's terribly difficult, but there's not much point if rdfind meets your needs.
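A minimal sketch of the find plus awk idea, using an awk associative array keyed on size plus filename, might look like this. The demo tree is made up for the example, GNU find is assumed for -printf, and filenames are assumed not to contain newlines. All paths are held in memory, so it suits moderate collections better than very large ones.

```shell
#!/bin/sh
# Throwaway demo tree; directory and file names are hypothetical.
demo=$(mktemp -d)
mkdir -p "$demo/a" "$demo/b" "$demo/c"
printf 'abc' > "$demo/a/song.mp3"
printf 'xyz' > "$demo/b/song.mp3"    # same size and name as a/song.mp3
printf 'abc' > "$demo/c/other.mp3"   # same size, different name

groups=$(find "$demo" -type f -printf '%s/%f/%p\n' |
awk -F/ '
{
    key = $1 "/" $2                      # size plus filename
    path = substr($0, length(key) + 2)   # skip "size/filename/"
    count[key]++
    if (count[key] == 1)
        paths[key] = path
    else
        paths[key] = paths[key] "\n" path
}
END {
    # Print every path whose key was seen more than once.
    for (key in count)
        if (count[key] > 1)
            print paths[key]
}')
printf '%s\n' "$groups"

rm -rf "$demo"
```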

Rdfind may do what you want, but you'll need to depend on a cryptographic hash/checksum (md5, sha1 or sha256) in lieu of the filesize. The hash is a stronger criterion than filesize, but this may or may not be what you want. For example, consider all of the metadata in a music file: if one file listed Schubert as the composer, the other potential duplicate listed Bruckner as the composer, and everything else in the file were exactly the same, the file size filter would classify it as a match, but the hash filter would not. The hash filter will use far more resources than the file size filter, but that may not be a concern if you filter duplicates only occasionally. Before you actually run rdfind, make sure to read man rdfind thoroughly and use the -dryrun option until you're confident the results are what you want. FWIW, this tutorial lists rdfind, and 3 other utilities for finding duplicate files.
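The Schubert/Bruckner case can be tried out with two stand-in files, as in the sketch below. The file contents are invented placeholders, not real mp3 data, and sha256sum from GNU coreutils is assumed as the hash tool. The two files have the same size but different bytes, so a size comparison matches while a hash comparison does not.

```shell
#!/bin/sh
# Stand-in files: same length, differing only in the composer field.
# The contents are placeholders, not real mp3 data.
demo=$(mktemp -d)
printf 'composer=Schubert;audio=0123456789' > "$demo/one.mp3"
printf 'composer=Bruckner;audio=0123456789' > "$demo/two.mp3"

# Size in bytes (tr strips the padding some wc implementations add).
size1=$(wc -c < "$demo/one.mp3" | tr -d ' ')
size2=$(wc -c < "$demo/two.mp3" | tr -d ' ')

# SHA-256 of the contents; sha256sum is assumed to be installed.
hash1=$(sha256sum < "$demo/one.mp3" | cut -d' ' -f1)
hash2=$(sha256sum < "$demo/two.mp3" | cut -d' ' -f1)

if [ "$size1" -eq "$size2" ]; then size_match=yes; else size_match=no; fi
if [ "$hash1" = "$hash2" ]; then hash_match=yes; else hash_match=no; fi
printf 'size filter match: %s\nhash filter match: %s\n' "$size_match" "$hash_match"

rm -rf "$demo"
```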
